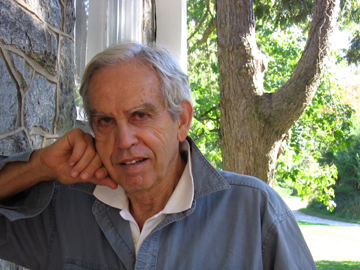

We are delighted to announce that Rob Hollister of Swarthmore College has been selected to receive the 2006 Peter H. Rossi Award for Contributions to the Theory or Practice of Program Evaluation. (To read his acceptance remarks, please click here.)

In the words of one nominator, “Rob’s work has made major contributions to our understanding of the cost-effectiveness of job training and public employment programs, income transfer programs and welfare reform, community health initiatives, educational and child care initiatives, and community development financial institutions. In addition, Rob has written extensively on methodological issues related to program evaluation, with a special emphasis on random assignment studies, econometric approaches intended to correct selection biases, and the evaluation of community-wide initiatives. In all cases, Rob’s work has been at the scientific frontier. It is also balanced and reflects his good judgment about where it is appropriate to devote substantial resources to program evaluation and where it is not.” The selection committee was also impressed that “Rob has been a devoted teacher who has introduced generations of students to the intellectual challenges related to program evaluation. In fact, many of his former students are now prominent professionals in the field.”

Rob Hollister’s first academic job was as an assistant professor of economics at Williams College from 1962 to 1964. He left Williams in 1964 to carry out a study for the Organization for Economic Cooperation and Development (OECD) in Paris. This study, his first major policy evaluation, examined the methods used in the OECD’s Mediterranean Regional Project, a major educational planning effort covering Greece, Italy, Portugal, Spain, Turkey, and Yugoslavia. It was published in 1967 as A Technical Evaluation of the First Stage of the Mediterranean Regional Project.

Hollister joined the War on Poverty effort in 1966 as a staff economist in the Office of Economic Opportunity (OEO), later becoming its chief of research and plans. The OEO was developing a “Five Year Plan to End Poverty,” which included a plan for a national Negative Income Tax (NIT) program to replace the old welfare (AFDC) program. In order to estimate the cost of an NIT, OEO funded the New Jersey Negative Income Tax experiment, the first large-scale use of a random assignment design to assess the likely impact of a public policy. Hollister headed the effort to design and implement the experiment.

In the fall of 1967, Hollister moved to the University of Wisconsin as a member of the Department of Economics and the recently created Institute for Research on Poverty. From that base, he continued work on the New Jersey Negative Income Tax experiment and participated in the creation of new experiments in that domain.

In 1971, Hollister joined the faculty of Swarthmore College as an associate professor and has been teaching there ever since. He is now the Joseph Wharton Professor of Economics.

Hollister worked with the Ford Foundation on developing the National Supported Work Demonstration, a random assignment study that tested, in fourteen cities across the nation, a direct employment program for ex-addicts, ex-offenders, women on welfare, and low-income youth. MDRC was created to implement this project, and Mathematica was selected to carry out the evaluation. Hollister was co-principal investigator for that evaluation, which took five years to complete.

From 1983 to 1985, Hollister was chairman of the Committee on Youth Employment Programs of the National Academy of Sciences. The committee reviewed what had been learned about the effectiveness or ineffectiveness of youth employment and training programs from the various program efforts of the 1970s. He coauthored the committee’s 1985 report, Youth Employment and Training Programs: The YEDPA Years.

From 1984 to 1993, Hollister worked for the Rockefeller Foundation on the Minority Female Single Parent project, an employment training effort for minority female single parents that took place in four cities. On Hollister’s recommendation, the evaluation was shifted from a quasi-experimental design to a full random assignment experimental design. In three of the cities, the program generated no statistically significant effects on employment or earnings, but in the fourth, at the Center for Employment and Training in San Jose, California, there were statistically significant impacts on employment, the largest in magnitude found for any employment and training program in the 1970s and 1980s.

From 1991 to 2000, Hollister worked on the development of the New Hope Project, an experiment run in Milwaukee, Wisconsin, that sought to test a package of benefits designed to “make work pay.” He developed the design of the basic benefits package and served as a member of the project’s National Advisory Committee, chairing the committee in its final years of operation.

From 1994 to 2004, Hollister chaired the Advisory Board for the Evaluation of the Health Link Project. This Robert Wood Johnson Foundation project was an intervention that attempted to help women and youth prisoners exiting the Rikers Island jail in New York City. Hollister and the committee members persuaded the foundation to switch from a proposed quasi-experimental design to a full random assignment experimental design, which was conducted by Mathematica Policy Research.

As is apparent from this short summary of his career, Hollister has been a strong advocate for the use of random assignment experiments to test various aspects of public policy. He has also often written about circumstances in which the experimental approach is unlikely to be feasible, and has tried to assess the relative merits of alternative evaluation methods. One widely cited work in this regard is “Problems in the Evaluation of Community-Wide Initiatives” (coauthored with Jennifer Hill). The authors point out the problems that arise in attempts to estimate the impact of multifaceted efforts in which the whole community is saturated with the program treatment. Emphasizing the inability to get a reliable “counterfactual,” the authors review a number of alternative observational and quasi-experimental methods that have been tried and conclude that none has been shown to be able to establish causal impacts.

In 1995, Hollister, along with his Swarthmore College colleague John Caskey, began work in a new area: the assessment of community development financial institutions (CDFIs). He and Caskey carried out evaluative studies of the Delta Initiative, which involved the creation of the Enterprise Corporation of the Delta (ECD) and Community Organizing for Work Force Development (COWFD). ECD is a community development financial institution serving the Delta regions of Mississippi, Louisiana, and Arkansas. They sought to assess the effects of ECD’s financial and technical assistance to area businesses, using methods that were decidedly non-experimental. They built a database on the employment, wage, and benefit packages of firms in the ECD loan portfolio and how these evolved over time, and from it made alternative estimates of jobs gained, retained, or lost in these firms. From these data they derived alternative estimates of cost per job gained or retained.

Following this project, Hollister and Caskey carried out a more general study of methods for evaluating the social impact of community development financial institutions. In a series of reports to the Ford Foundation, they reviewed the strengths and weaknesses of various evaluation methods that might be applied to evaluating the performance of CDFIs on both financial and social outcomes. (Hollister has also worked as a consultant on several evaluations done by Coastal Enterprises, a large CDFI that serves the state of Maine.)

Most recently, Hollister has been working on evaluations in the field of education. Hollister assisted a Swarthmore student, Ty Wilde, with a paper assessing the effectiveness of Propensity Score Matching (PSM) methods. Using data from the Tennessee STAR class-size experiment, they assessed PSM’s effectiveness by comparing the impact estimates obtained using PSM to the “true impacts” estimated with the STAR experimental data.

As part of their paper, Wilde and Hollister discuss “how close is close enough” for non-experimental methods: the criteria that might be applied to decide whether a given non-experimental method provides estimates that are “close enough” to the estimates derived with experimental methods to provide a reliable basis for decision making. This is part of Hollister’s continued search for a method of estimating impacts that could be considered “second best” to a random assignment experimental design. In this example in education, Propensity Score Matching was not a clear candidate for “second best.”

Recently, Hollister has worked as a consultant to the Institute of Education Sciences of the U.S. Department of Education, assisting its efforts to bring experimental design assessments into many areas of educational programming and training.

Hollister is currently completing a book entitled Nectar of the Gods: Social Experiments and their Flawed Alternatives, which will include chapters on the Negative Income Tax Experiment, discussion of other experiments, and evaluations of alternative methods of assessing the impact of social programs.

As one of Hollister’s nominators concluded: “Rob has been a beacon of high standards in the field of evaluation, where standards are a political football. He has had a great effect on the shape and implementation of evaluation policy in both job training and education. Rob is one of the most tenacious advocates of random assignment there is today.”
